Artificial Intelligence (AI) has become an integral part of our daily lives, from virtual assistants like Siri and Alexa to more complex applications such as autonomous vehicles and predictive analytics in healthcare. However, with its increasing presence, the question of trust in AI arises. How far do people trust AI, and what factors influence this trust? At UIUX Studio, we embarked on a comprehensive research project to explore these questions and uncover the nuances of human-AI trust dynamics.

The Importance of Trust in AI

Trust is a critical component in the adoption and successful integration of AI technologies. Without trust, users are unlikely to engage fully with AI applications, potentially hindering the benefits these technologies can offer. Understanding the levels of trust people have in AI and the factors that affect it can help designers, developers, and policymakers create more reliable and user-friendly AI systems.

Research Methodology

Our research methodology was designed to gather a wide range of insights through multiple channels:

1. Surveys

We conducted extensive surveys to gather quantitative data on people’s perceptions of, and trust in, various AI applications. The surveys included questions on:

- General awareness and understanding of AI

- Trust levels in specific AI applications (e.g., healthcare, finance, autonomous vehicles)

- Factors influencing trust (e.g., transparency, reliability, previous experiences)

- Demographic information to analyze trust variations across different groups
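As a rough illustration of how responses like these could be summarized, the sketch below averages trust ratings per application and per demographic group. The 1-to-5 rating scale, the field names, and the sample rows are hypothetical placeholders, not the actual survey instrument or data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: trust rated on an assumed 1-5 scale.
# Field names and values are illustrative only, not real survey data.
responses = [
    {"application": "healthcare", "age_group": "18-34", "trust": 4},
    {"application": "healthcare", "age_group": "55+", "trust": 3},
    {"application": "autonomous vehicles", "age_group": "18-34", "trust": 3},
    {"application": "autonomous vehicles", "age_group": "55+", "trust": 1},
]


def mean_trust_by(rows, *keys):
    """Average the trust rating for each combination of the given fields."""
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[k] for k in keys)].append(row["trust"])
    return {group: mean(scores) for group, scores in groups.items()}


# Trust per application, then broken down further by age group.
by_application = mean_trust_by(responses, "application")
by_app_and_age = mean_trust_by(responses, "application", "age_group")

for group, score in by_app_and_age.items():
    print(group, score)
```

Grouping by more than one field at a time is what makes it possible to see whether a gap between applications holds across demographic groups or is driven by one of them.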

2. Interviews

In-depth interviews provided qualitative insights into personal experiences and attitudes towards AI. These interviews helped us understand the context behind the survey data and explore specific concerns and expectations users have about AI.

3. Case Studies

We analyzed case studies of well-known AI applications, focusing on instances where trust was successfully built or lost. These case studies included:

- Healthcare AI systems

- Autonomous vehicles

- AI in financial services

- Consumer AI products (e.g., smart home devices)

4. Literature Review

We reviewed existing research and literature on AI trust to contextualize our findings within the broader field of AI and human-computer interaction studies.

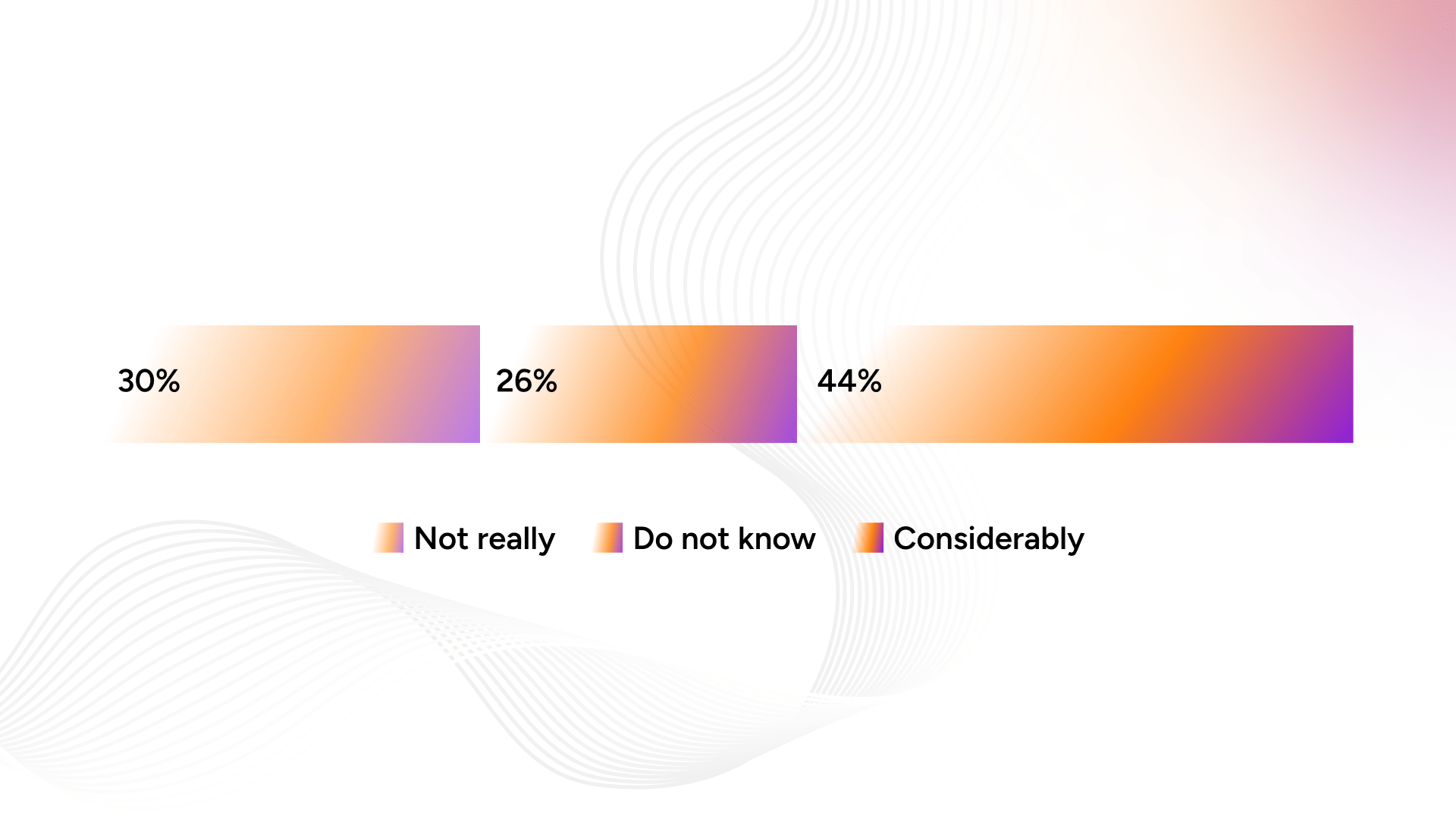

Key Findings

1. Trust Levels Vary by Application

Our research revealed that trust in AI varies significantly by application. For instance:

- High Trust: Healthcare AI and financial analytics were generally trusted more due to perceived benefits and rigorous testing standards.

- Low Trust: Autonomous vehicles and AI in law enforcement faced more skepticism due to safety concerns and ethical implications.

2. Transparency and Explainability

One of the most significant factors influencing trust was transparency. Users were more likely to trust AI systems that provided clear explanations of how decisions were made. Black-box AI models, where the decision-making process is opaque, generated more distrust.

3. Reliability and Performance

Consistent performance was crucial for building trust. Users expressed higher trust in AI systems that remained accurate and dependable over time.

4. Previous Experiences

Personal or vicarious experiences with AI significantly impacted trust levels. Positive experiences with reliable AI systems led to higher trust, while negative experiences or reports of AI failures reduced trust.

5. Ethical and Privacy Concerns

Ethical considerations, such as bias in AI decision-making and data privacy, were major factors affecting trust. Users were wary of AI systems that might compromise their privacy or exhibit biased behavior.

Implications for AI Design and Development

1. Prioritize Transparency

Design AI systems with transparency in mind. Providing users with clear, understandable explanations of how AI decisions are made can significantly enhance trust.
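One simple way to picture this is a model whose score can be broken down factor by factor and shown to the user. The sketch below assumes a hypothetical linear scoring model for a loan-style decision; the feature names, weights, and threshold are invented for illustration and do not describe any real system.

```python
# Hypothetical weights for a simple linear scoring model (illustrative only).
WEIGHTS = {"income": 0.5, "existing_debt": -0.75, "years_employed": 0.25}
THRESHOLD = 1.0  # assumed cut-off for a positive decision


def explain_decision(features):
    """Return the decision plus each feature's contribution to the score."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = sum(contributions.values())
    # Sort so the most influential factors are listed first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"approved": score >= THRESHOLD, "score": score, "factors": ranked}


result = explain_decision({"income": 4.0, "existing_debt": 2.0, "years_employed": 2.0})

print("Approved" if result["approved"] else "Declined")
for name, contribution in result["factors"]:
    direction = "raised" if contribution > 0 else "lowered"
    print(f"- {name} {direction} the score by {abs(contribution):.2f}")
```

More complex models need dedicated explanation techniques, but the user-facing goal is the same: show which factors mattered and in which direction.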

2. Ensure Reliability

Focus on building reliable AI systems that perform consistently well. Rigorous testing and validation are essential to demonstrate reliability to users.

3. Address Ethical Concerns

Incorporate ethical considerations into the design and development process. Mitigating bias and ensuring data privacy can help build trust in AI systems.
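As one possible starting point for a bias check, the sketch below compares how often each group receives a positive outcome, a measure often called the demographic parity difference. It is only one of several fairness measures, and the group labels and audit log here are made up for illustration.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Largest difference in positive-outcome rate between any two groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: group labels and outcomes are invented.
audit_log = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

gap = parity_gap(audit_log)
print(approval_rates(audit_log), gap)
```

A large gap does not prove unfair treatment on its own, but it flags where a closer review is warranted. What counts as an acceptable gap is a judgment call for each team and context.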

4. Foster Positive User Experiences

Encourage positive interactions with AI by designing user-friendly interfaces and providing excellent customer support. Positive experiences can build a foundation of trust that encourages broader acceptance and use of AI technologies.

5. Educate Users Continuously

Educate users about AI capabilities and limitations. A well-informed user base is more likely to understand and trust AI systems.

Conclusion

Trust in AI is a multifaceted issue influenced by various factors, including transparency, reliability, personal experiences, and ethical considerations. At UIUX Studio, our research highlights the importance of designing AI systems that prioritize these factors to build and maintain user trust. As AI continues to evolve, fostering trust will be crucial for its successful integration into society.

By understanding how far people trust AI and what influences this trust, we can create AI technologies that are not only advanced and efficient but also trusted and embraced by users. If you’re interested in learning more about our research or how UIUX Studio can help you design trustworthy AI systems, feel free to reach out to us. Together, we can shape the future of AI in a way that is beneficial to, and trusted by, all.